
Dawn of a New Day

To: Executive Staff and direct reports

Date: October 28, 2010

From: Ray Ozzie

Subject: Dawn of a New Day

Five years ago, having only recently arrived at the company, I wrote ‘The Internet Services Disruption’ in order to kick off a major change management process across the company. In the opening section of that memo, I noted that about every five years our industry experiences what appears to be an inflection point that results in great turbulence and change.

In the wake of that memo, the last five years have been a time of great transformation for Microsoft. At this point we’re truly all in with regard to services. I’m incredibly proud of the people and the work that has been done across the company, and of the way that we’ve turned this services transformation into opportunities that will pay off for years to come.

In the realm of the service-centric ‘seamless OS’ we’re well on the path to having Windows Live serve as an optional yet natural services complement to the Windows and Office software. In the realm of ‘seamless productivity’, Office 365 and our 2010 Office, SharePoint and Live deliverables have shifted Office from being PC-centric toward now also robustly spanning the web and mobile. In ‘seamless entertainment’, Xbox Live has transformed Xbox into a real-time, social, media-rich TV experience.

And in the realm of what I referred to as our ‘services platform’, I couldn’t be more proud of what’s emerged as Windows Azure & SQL Azure. Inspired by little more than a memo, a few decks and discussions, intrapreneurial leaders stepped up to build and deliver an innovative service that, while still nascent, will over time prove to be transformational for the company and the industry.

Our products are now more relevant than ever. Bing has blossomed and its advertising, social, metadata & real-time analytics capabilities are growing to power every one of our myriad services offerings. Over the years the Windows client expanded its relevance even with the rise of low-cost netbooks. Office expanded its relevance even with a shift toward open data formats & web-based productivity. Our server assets have had greater relevance even with a marked shift toward virtualization & cloud computing.

Quite important to me, I’m also proud of the degree to which we’ve continued to grow and mature in the area of responsible competition, and of the breadth and depth of our cultural shift toward genuine openness, interoperability and privacy, which are now such key cornerstones of everything we do.

Yet, for all our great progress, some of the opportunities I laid out in my memo five years ago remain elusive and are yet to be realized.

Certain of our competitors’ products and their rapid advancement & refinement of new usage scenarios have been quite noteworthy. Our early and clear vision notwithstanding, their execution has surpassed our own in mobile experiences, in the seamless fusion of hardware & software & services, and in social networking & myriad new forms of internet-centric social interaction.

We’ve seen agile innovation playing out against a backdrop in which many dramatic changes have occurred across all aspects of our industry’s core infrastructure. These myriad evolutions of our infrastructure have been predicted for years, but in the past five years so much has happened that we’ve already grown to take many of these changes for granted: ubiquitous internet access over wired, WiFi and 3G/4G networks; many now even take for granted that LTE and ‘whitespace’ will be broadly delivered. We’ve seen our boxy devices based on ‘system boards’ morph into sleek, elegantly designed devices based on transformational ‘systems on a chip’. We’ve seen bulky CRT monitors replaced by impossibly thin touch screens. We’ve seen business processes and entire organizations transformed by the zero-friction nature of the internet, with the walls between producer and consumer having now vanished. Substantial business ecosystems have collapsed as many classic aggregation & distribution mechanisms no longer make sense.

Organizations worldwide, in every industry, are now stepping back and rethinking the basics, questioning their most fundamental structural tenets. Doing so is necessary for their long-term growth and survival. Our own industry is no exception: we must question our most fundamental assumptions about infrastructure & apps.

The past five years have been breathtaking. But the next five years will bring about yet another inflection point – a transformation that will once again yield unprecedented opportunities for our company and our industry catalyzed by the huge & inevitable shift in apps & infrastructure that’s truly now just begun.

Imagining A “Post-PC” World

One particular day next month, November 20th, 2010, represents a significant milestone. Those of us in the PC industry who placed an early bet on a then-nascent PC graphical UI will toast that day as being the 25th anniversary of the launch of Windows 1.0.

Our journey began in support of audacious concepts that were originally just imagined and dreamed:

A computer that’s ‘personal’. Or, a PC on every desktop and in every home, running Microsoft software.

Windows may not have been the first graphical UI on a personal computer, but over time the product unquestionably democratized computing & communications for more than a billion people worldwide. Windows and Office truly grew to define the PC, establishing the core concepts and usage scenarios that for so many of us, over time, have become etched in stone.

For the most part, we’ve grown to equate ‘computing’ with specific familiar ‘artifacts’ such as the ‘computer’, the ‘program’ that’s installed on a computer, and the ‘files’ that are stored on that computer’s ‘desktop’. For the majority of users, the PC is largely indistinguishable even from the ‘browser’ or ‘internet’.

As such, it’s difficult for many of us to even imagine that this could ever change.

But as the PC client and PC-based server have grown from their simple roots over the past 25 years, the PC-centric / server-centric model has accreted simply immense complexity. This is a direct by-product of the PC’s success: how broad and diverse the PC’s ecosystem has become; how complex it’s become to manage the acquisition & lifecycle of our hardware, software, and data artifacts. It’s undeniable that some form of this complexity is readily apparent to almost all our customers: your neighbors; any small business owner; the ‘tech’ head of household; enterprise IT.

Success begets product requirements. And even when superhuman engineering and design talent is applied, there are limits to how much you can apply beautiful veneers before inherent complexity is destined to bleed through.

Complexity kills. Complexity sucks the life out of users, developers and IT. Complexity makes products difficult to plan, build, test and use. Complexity introduces security challenges. Complexity causes administrator frustration.

And as time goes on and as software products mature – even with the best of intent – complexity is inescapable.

Indeed, many have pointed out that there’s a flip side to complexity: in our industry, complexity of a successful product also tends to provide some assurance of its longevity. Complex interdependencies and any product’s inherent ‘quirks’ will virtually guarantee that broadly adopted systems won’t simply vanish overnight. And so long as a system is well-supported and continues to provide unique and material value to a customer, even many of the most complex and broadly maligned assets will hold their ground. And why not? They’re valuable. They work.

But so long as customer or competitive requirements drive teams to build layers of new function on top of a complex core, ultimately a limit will be reached. Fragility can grow to constrain agility. Some deep architectural strengths can become irrelevant – or worse, can become hindrances.

Our PC software has driven the creation of an amazing ecosystem, and is incredibly valuable to a world of customers and partners. And the PC and its ecosystem is going to keep growing, and growing, for a long time to come. But today, as I wrote five years ago,

“Just as in the past, we must reflect upon what’s going on around us, and reflect upon our strengths, weaknesses and industry leadership responsibilities, and respond. As much as ever, it’s clear that if we fail to do so, our business as we know it is at risk.”

And so at this juncture, given all that has transpired in computing and communications, it’s important that all of us do precisely what our competitors and customers will ultimately do: close our eyes and form a realistic picture of what a post-PC world might actually look like, if it were to ever truly occur. How would customers accomplish the kinds of things they do today? In what ways would it be better? In what ways would it be worse, or just different?

Those who can envision a plausible future that’s brighter than today will earn the opportunity to lead.

In our industry, if you can imagine something, you can build it. We at Microsoft know from our common past – even the past five years – that if we know what needs to be done, and if we act decisively, any challenge can be transformed into a significant opportunity. And so, the first step for each of us is to imagine fearlessly; to dream.

Continuous Services | Connected Devices

What’s happened in every aspect of computing & communications over the course of the past five years has given us much to dream about. Certainly the ‘net-connected PC, and PC-based servers, have driven the creation of an incredible industry and have laid the groundwork for mass-market understanding of so much of what’s possible with ‘computers’. Yet, slowly but surely, our lives, businesses and society are in the process of a wholesale reconfiguration in the way we perceive and apply technology.

As we’ve begun to embrace today’s incredibly powerful app-capable phones and pads into our daily lives, and as we’ve embraced myriad innovative services & websites, the early adopters among us have decidedly begun to move away from mentally associating our computing activities with the hardware/software artifacts of our past such as PCs, CD-installed programs, desktops, folders & files.

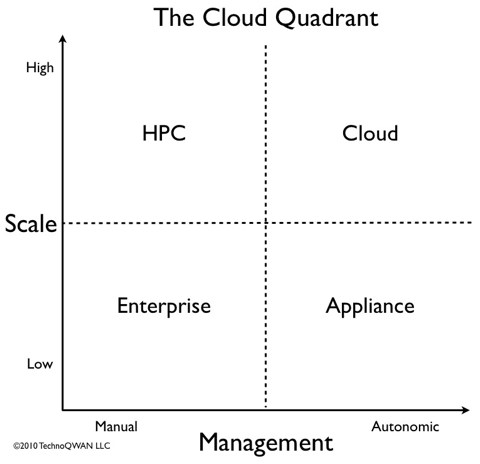

Instead, to cope with the inherent complexity of a world of devices, a world of websites, and a world of apps & personal data that is spread across myriad devices & websites, a simple conceptual model is taking shape that brings it all together. We’re moving toward a world of 1) cloud-based continuous services that connect us all and do our bidding, and 2) appliance-like connected devices enabling us to interact with those cloud-based services.

Continuous services are websites and cloud-based agents that we can rely on for more and more of what we do. On the back end, they possess attributes enabled by our newfound world of cloud computing: They’re always-available and are capable of unbounded scale. They’re constantly assimilating & analyzing data from both our real and online worlds. They’re constantly being refined & improved based on what works, and what doesn’t. By bringing us all together in new ways, they constantly reshape the social fabric underlying our society, organizations and lives. From news & entertainment, to transportation, to commerce, to customer service, we and our businesses and governments are being transformed by this new world of services that we rely on to operate flawlessly, 7×24, behind the scenes.

Our personal and corporate data now sits within these services – and as a result we’re more and more concerned with issues of trust & privacy. We most commonly engage and interact with these internet-based sites & services through the browser. But increasingly, we also interact with these continuous services through apps that are loaded onto a broad variety of service-connected devices – on our desks, or in our pockets & pocketbooks.

Connected devices beyond the PC will increasingly come in a breathtaking number of shapes and sizes, tuned for a broad variety of communications, creation & consumption tasks. Each individual will interact with a fairly good number of these connected devices on a daily basis – their phone / internet companion; their car; a shared public display in the conference room, living room, or hallway wall. Indeed some of these connected devices may even grow to bear a resemblance to today’s desktop PC or clamshell laptop. But there’s one key difference in tomorrow’s devices: they’re relatively simple and fundamentally appliance-like by design, from birth. They’re instantly usable, interchangeable, and trivially replaceable without loss. But being appliance-like doesn’t mean that they’re not also quite capable in terms of storage; rather, it just means that storage has shifted to being more cloud-centric than device-centric. A world of content – both personal and published – is streamed, cached or synchronized with a world of cloud-based continuous services.

Moving forward, these ‘connected devices’ will also frequently take the form of embedded devices of varying purpose including telemetry & control. Our world increasingly will be filled with these devices – from the remotely diagnosed elevator, to the sensors on our highways and throughout our environment. These embedded devices will share a key attribute with non-embedded UI-centric devices: they’re appliance-like, easily configured, interchangeable and replaceable without loss.

At first blush, this world of continuous services and connected devices doesn’t seem very different from today’s. But those who build, deploy and manage today’s websites understand viscerally that fielding a truly continuous service is incredibly difficult and is only achieved by the most sophisticated high-scale consumer websites. And those who build and deploy application fabrics targeting connected devices understand how challenging it can be to simply & reliably just ‘sync’ or ‘stream’. To achieve these seemingly simple objectives will require dramatic innovation in human interface, hardware, software and services.

How It Might Happen

From the perspective of living so deeply within the world of the device-centric software & hardware that we’ve collectively created over the past 25 years, it’s understandably difficult to imagine how a dramatic, wholesale shift toward this new continuous services + connected devices model would ever plausibly gain traction relative to what’s so broadly in use today. But in the technology world, these industry-scoped transformations have indeed happened before. Complexity accrues; dramatically new and improved capabilities arise.

Many years ago, when the PC first emerged as an alternative to the mini and mainframe, the facets of simplicity and broad approachability were key to its amazing success. If there’s to be a next wave of industry reconfiguration – toward a world of internet-connected continuous services and appliance-like connected devices – it would likely arise again from those very same facets.

It may take quite a while to happen, but I believe that in some form or another, without doubt, it will.

For each of us who can clearly envision the end-game, the opportunity is to recognize both the inevitability and value inherent in the big shift ahead, and to do what it takes to lead our customers into this new world.

In the short term, this means imagining the ‘killer apps & services’ and ‘killer devices’ that match up to a broad range of customer needs as they’ll evolve in this new era. Whether in the realm of communications, productivity, entertainment or business, tomorrow’s experiences & solutions are likely to differ significantly even from today’s most successful apps. Tomorrow’s experiences will be inherently transmedia & trans-device. They’ll be centered on your own social & organizational networks. For both individuals and businesses, new consumption & interaction models will change the game. It’s inevitable.

To deliver what seems to be required – e.g. an amazing level of coherence across apps, services and devices – will require innovation in user experience, interaction model, authentication model, user data & privacy model, policy & management model, programming & application model, and so on. These platform innovations will happen in small, progressive steps, providing significant opportunity to lead. In adapting our strategies, tactics, plans & processes to deliver what’s required by this new world, the opportunity is simply huge.

The one irrefutable truth is that in any large organization, any transformation that is to ‘stick’ must emerge from within. Those on the outside can strongly influence, particularly with their wallets. Those above are responsible for developing and articulating a compelling vision, eliminating obstacles, prioritizing resources, and generally setting the stage with a principled approach.

But the power and responsibility to truly effect transformation exists in no small part at the edge. Within those who, led or inspired, feel personally and collectively motivated to make; to act; to do.

In taking the time to read this, most likely it’s you.

Realizing a Dream

In 1939, in New York City, there was an amazing World’s Fair. It was called ‘the greatest show of all time’.

In that year Americans were exhausted, having lived through a decade of depression. Unemployment still hovered above 17%. In Europe, the next world war was brewing. It was an undeniably dark juncture for us all.

And yet, this 1939 World’s Fair opened in a way that evoked broad and acute hope: the promise of a glorious future. There were pavilions from industry & countries all across the world showing vision; showing progress: The Futurama; The World of Tomorrow. Icons conjuring up images of the future: The Trylon; The Perisphere.

The fair’s theme: Dawn of a New Day.

Surrounding the event, stories were written and vividly told to help everyone envision and dream of a future of modern conveniences; superhighways & spacious suburbs; technological wonders to alleviate hardship and improve everyday life.

The fair’s exhibits and stories laid a broad-based imprint across society of what needed to be done. To plausibly leap from such a dark time to such a potentially wonderful future meant having an attitude, individually and collectively, that we could achieve whatever we set our minds to. That anything was possible.

In the following years – fueled both by what was necessary for survival and by our hope for the future – manufacturing jumped 50%. Technological breakthroughs abounded. What had been so hopefully and optimistically imagined by many was achieved by all.

And, as their children, now we’re living their dreams.

Today, in my own dreams, I see a great, expansive future for our industry and for our company – a future of amazing, pervasive cloud-centric experiences delivered through a world of innovative devices that surround us.

Without a doubt, as in 1939 there are conditions in our society today that breed uncertainty: jobs, housing, health, education, security, the environment. And yes, there are also challenging conditions for our company: it’s a tough, fast-moving, and highly competitive environment.

And yet, even in the presence of so much uncertainty, I feel an acute sense of hope and optimism.

When I look forward, I can’t help but see the potential for a much brighter future: Even beyond the first billion, so many more people using technology to improve their lives, businesses and societies, in so many ways. New apps, services & scenarios in communications, collaboration & productivity, commerce, education, health care, emergency management, human services, transportation, the environment, security – the list goes on, and on, and on.

We’ve got so far to go before we even scratch the surface of what’s now possible. All these new services will be cloud-centric ‘continuous services’ built in a way that we can all rely upon. As such, cloud computing will become pervasive for developers and IT – a shift that’ll catalyze the transformation of infrastructure, systems & business processes across all major organizations worldwide. And all these new services will work hand-in-hand with an unimaginably fascinating world of devices-to-come. Today’s PCs, phones & pads are just the very beginning; we’ll see decades to come of incredible innovation from which will emerge all sorts of ‘connected companions’ that we’ll wear, we’ll carry, we’ll use on our desks & walls and the environment all around us. Service-connected devices going far beyond just the ‘screen, keyboard and mouse’: humanly-natural ‘conscious’ devices that’ll see, recognize, hear & listen to you and what’s around you, that’ll feel your touch and gestures and movement, that’ll detect your proximity to others; that’ll sense your location, direction, altitude, temperature, heartbeat & health.

Let there be no doubt that the big shifts occurring over the next five years ensure that this will absolutely be a time of great opportunity for those who put past technologies & successes into perspective, and envision all the transformational value that can be offered moving forward to individuals, businesses, governments and society. It’s the dawn of a new day – the sun having now arisen on a world of continuous services and connected devices.

And so, as Microsoft has done so successfully over the course of the company’s history, let’s mark this five-year milestone by once again fearlessly embracing that which is technologically inevitable – clearing a path to the extraordinary opportunity that lies ahead for us, for the industry, and for our customers.

Ray