Quick Reference: Hadoop Tools Ecosystem: "

Just a quick reference of the continuously growing Hadoop tools ecosystem.

Hadoop

The Apache Hadoop project develops open-source software for reliable, scalable, distributed computing.

hadoop.apache.org

HDFS

A distributed file system that provides high throughput access to application data.

hadoop.apache.org/hdfs/

MapReduce

A software framework for distributed processing of large data sets on compute clusters.
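To make the programming model concrete, here is a minimal single-process sketch of the map, shuffle, and reduce phases, using word counting as the example. It is plain Python, not the Hadoop API; the function names are made up for illustration, and a real cluster would run the map and reduce calls in parallel across machines.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one input record."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle: group all emitted values by key, as the framework would."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: collapse the values collected for one key into one result."""
    return key, sum(values)


def word_count(documents):
    """Run the three phases in sequence over an iterable of documents."""
    pairs = (pair for doc in documents for pair in map_phase(doc))
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


counts = word_count(["Hadoop stores data", "Hadoop processes data"])
```

Because each map call only sees one record and each reduce call only sees one key, the work can be spread over many nodes without the functions knowing about each other.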

Amazon Elastic MapReduce

Amazon Elastic MapReduce is a web service that enables businesses, researchers, data analysts, and developers to easily and cost-effectively process vast amounts of data. It utilizes a hosted Hadoop framework running on the web-scale infrastructure of Amazon Elastic Compute Cloud (Amazon EC2) and Amazon Simple Storage Service (Amazon S3).

aws.amazon.com/elasticmapreduce/

Cloudera Distribution for Hadoop (CDH)

Cloudera’s Distribution for Hadoop (CDH) is Cloudera’s packaged distribution of Apache Hadoop and related projects, positioned as a Hadoop-based data management platform.

cloudera.com/hadoop

ZooKeeper

A high-performance coordination service for distributed applications. ZooKeeper is a centralized service for maintaining configuration information, naming, providing distributed synchronization, and providing group services.

hadoop.apache.org/zookeeper/

HBase

A scalable, distributed database that supports structured data storage for large tables.

hbase.apache.org

Avro

A data serialization system, similar to ☞ Thrift and ☞ Protocol Buffers.

avro.apache.org
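Avro schemas are written in JSON. The sketch below builds one as a Python dict and checks a record against it with a toy validator. The `User` record and its fields are hypothetical, and `matches_schema` is an illustration only: it is not part of the Avro library and handles just the two primitive types shown.

```python
import json

# Hypothetical record type; the name and fields are made up for illustration.
user_schema = {
    "type": "record",
    "name": "User",
    "fields": [
        {"name": "name", "type": "string"},
        {"name": "age", "type": "int"},
    ],
}

# Toy mapping from two Avro primitive type names to Python types.
PYTHON_TYPES = {"string": str, "int": int}


def matches_schema(record, schema):
    """Return True if the record has exactly the schema's fields, correctly typed."""
    field_names = {field["name"] for field in schema["fields"]}
    if set(record) != field_names:
        return False
    return all(
        isinstance(record[field["name"]], PYTHON_TYPES[field["type"]])
        for field in schema["fields"]
    )


# The JSON text is what would typically be stored in a schema file.
schema_json = json.dumps(user_schema, indent=2)
```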

Sqoop

Sqoop (“SQL-to-Hadoop”) is a straightforward command-line tool with the following capabilities:

- Imports individual tables or entire databases to files in HDFS

- Generates Java classes to allow you to interact with your imported data

- Provides the ability to import from SQL databases straight into your Hive data warehouse

cloudera.com/downloads/sqoop/
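As a rough illustration of the command-line usage, the sketch below assembles a Sqoop import invocation in Python. The JDBC URL and table name are hypothetical, and the flags (`--connect`, `--table`, `--hive-import`) are the ones I believe the Sqoop user guide documents; check them against your installed version before relying on this.

```python
import shlex


def sqoop_import_command(jdbc_url, table, hive_import=False):
    """Build the argument list for importing one table with Sqoop."""
    args = ["sqoop", "import", "--connect", jdbc_url, "--table", table]
    if hive_import:
        # Load the imported table into Hive instead of leaving plain HDFS files.
        args.append("--hive-import")
    return args


# Hypothetical database and table, for illustration only.
cmd = sqoop_import_command(
    "jdbc:mysql://db.example.com/shop", "orders", hive_import=True
)
print(shlex.join(cmd))
```

The list form can be passed directly to `subprocess.run` on a machine where Sqoop is installed.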

Flume

Flume is a distributed, reliable, and available service for efficiently moving large amounts of data soon after the data is produced.

archive.cloudera.com/cdh/3/flume/

Hive

Hive is a data warehouse infrastructure built on top of Hadoop that provides tools for easy data summarization, ad hoc querying, and analysis of large datasets stored in Hadoop files. It provides a mechanism to put structure on this data, along with a simple query language called Hive QL, which is based on SQL and lets users familiar with SQL query this data. The language also lets traditional map/reduce programmers plug in their custom mappers and reducers for more sophisticated analysis that the built-in capabilities of the language may not support.

hive.apache.org
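A custom mapper for Hive is usually just a script that reads tab-separated rows on standard input and writes tab-separated rows to standard output. The sketch below shows the core of such a script; the two-column input layout (`user_id`, `url`) is an assumption made up for this example.

```python
def transform(lines):
    """Turn 'user_id<TAB>url' rows into 'user_id<TAB>domain' rows."""
    for line in lines:
        user_id, url = line.rstrip("\n").split("\t")
        if "://" in url:
            # 'http://example.com/a' splits into ['http:', '', 'example.com', 'a'].
            domain = url.split("/")[2]
        else:
            domain = url.split("/")[0]
        yield f"{user_id}\t{domain}"


# In a real script the rows would come from sys.stdin and be printed:
#     for row in transform(sys.stdin):
#         print(row)
sample_rows = ["u1\thttp://example.com/a\n", "u2\texample.org/b\n"]
result = list(transform(sample_rows))
```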

Pig

A high-level data-flow language and execution framework for parallel computation. Apache Pig is a platform for analyzing large data sets that consists of a high-level language for expressing data analysis programs, coupled with infrastructure for evaluating these programs. The salient property of Pig programs is that their structure is amenable to substantial parallelization, which in turn enables them to handle very large data sets.

pig.apache.org
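To give a feel for the data-flow style, here is a plain-Python analogy of a filter, group, and aggregate pipeline. This is not Pig Latin and does not use any Pig API; the `(user, bytes_sent)` records are hypothetical.

```python
from itertools import groupby
from operator import itemgetter

# Hypothetical input relation: (user, bytes_sent) tuples.
records = [("alice", 120), ("bob", 30), ("alice", 80), ("carol", 5)]

# Step 1: filter out small transfers. Each record is judged on its own.
large = [record for record in records if record[1] >= 10]

# Step 2: group by user. itertools.groupby needs its input sorted by the key.
grouped = groupby(sorted(large, key=itemgetter(0)), key=itemgetter(0))

# Step 3: aggregate each group independently of the others.
totals = {user: sum(size for _, size in rows) for user, rows in grouped}
```

Every step works on one record or one group at a time, which is the kind of structure that lets a data-flow program be parallelized across a cluster.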

Oozie

Oozie is a workflow/coordination service to manage data processing jobs for Apache Hadoop. It is an extensible, scalable and data-aware service to orchestrate dependencies between jobs running on Hadoop (including HDFS, Pig and MapReduce).

yahoo.github.com/oozie
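The core idea of orchestrating dependencies between jobs can be sketched as a topological sort. Oozie itself defines workflows in XML; the snippet below only illustrates the ordering problem, using Python's standard `graphlib` module (Python 3.9+) and hypothetical job names.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each job maps to the set of jobs it depends on.
workflow = {
    "import_logs": set(),
    "clean_logs": {"import_logs"},
    "pig_sessions": {"clean_logs"},
    "mr_counts": {"clean_logs"},
    "daily_report": {"pig_sessions", "mr_counts"},
}

# static_order() yields every job after all of its dependencies.
order = list(TopologicalSorter(workflow).static_order())
```

Here `pig_sessions` and `mr_counts` do not depend on each other, so a scheduler would be free to run them at the same time once `clean_logs` has finished.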

Cascading

Cascading is a Query API and Query Planner used for defining and executing complex, scale-free, and fault-tolerant data processing workflows on a Hadoop cluster.

cascading.org

Cascalog

Cascalog is a tool for processing data on Hadoop with Clojure in a concise and expressive manner. Cascalog combines two cutting edge technologies in Clojure and Hadoop and resurrects an old one in Datalog. Cascalog is high performance, flexible, and robust.

github.com/nathanmarz/cascalog

HUE

Hue is a graphical user interface to operate and develop applications for Hadoop. Hue applications are collected into a desktop-style environment and delivered as a Web application, requiring no additional installation for individual users.

archive.cloudera.com/cdh3/hue

You can read more about HUE on the ☞ Cloudera blog.

Chukwa

Chukwa is a data collection system for monitoring large distributed systems. Chukwa is built on top of the Hadoop Distributed File System (HDFS) and Map/Reduce framework and inherits Hadoop’s scalability and robustness. Chukwa also includes a flexible and powerful toolkit for displaying, monitoring and analyzing results to make the best use of the collected data.

incubator.apache.org/chukwa/

Mahout

A scalable machine learning and data mining library.

mahout.apache.org

Integration with Relational databases

Integration with Data Warehouses

The only list I have is ☞ MapReduce, RDBMS, and Data Warehouse, but I’m afraid it is quite old. So maybe someone could help me update it.

Anything else? Once we validate this list, I’ll probably have to move it on the

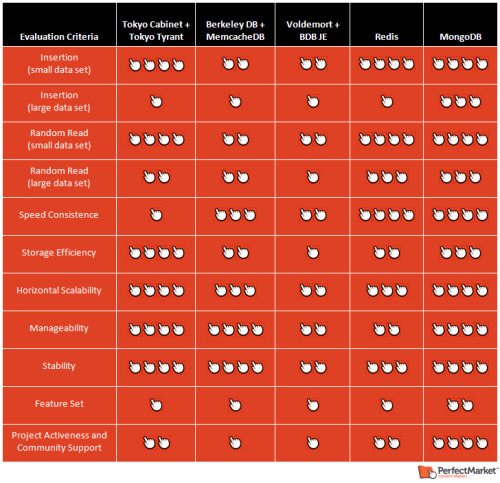

NoSQL reference

Original title and link: Quick Reference: Hadoop Tools Ecosystem (NoSQL databases © myNoSQL)

"